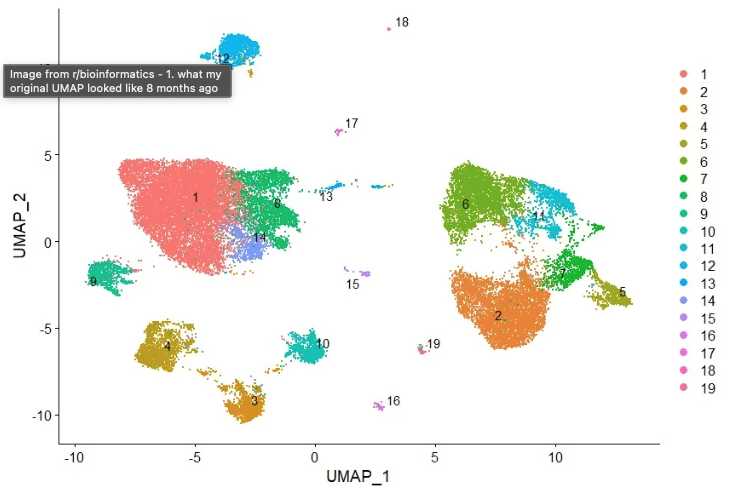

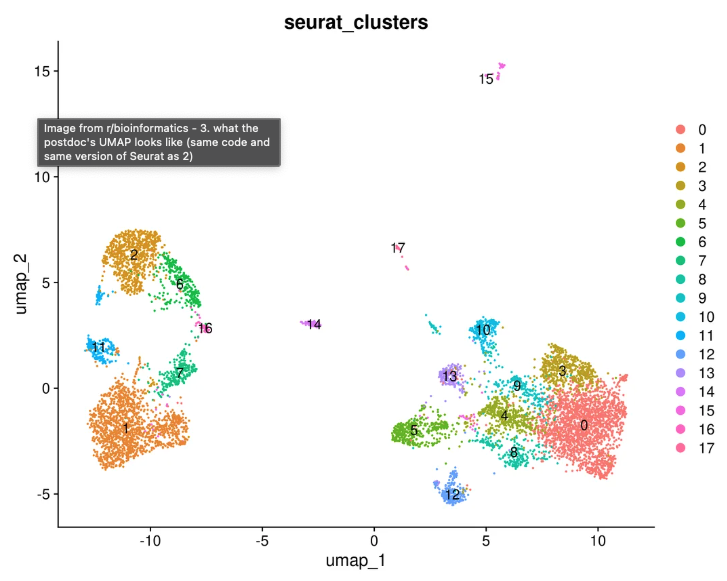

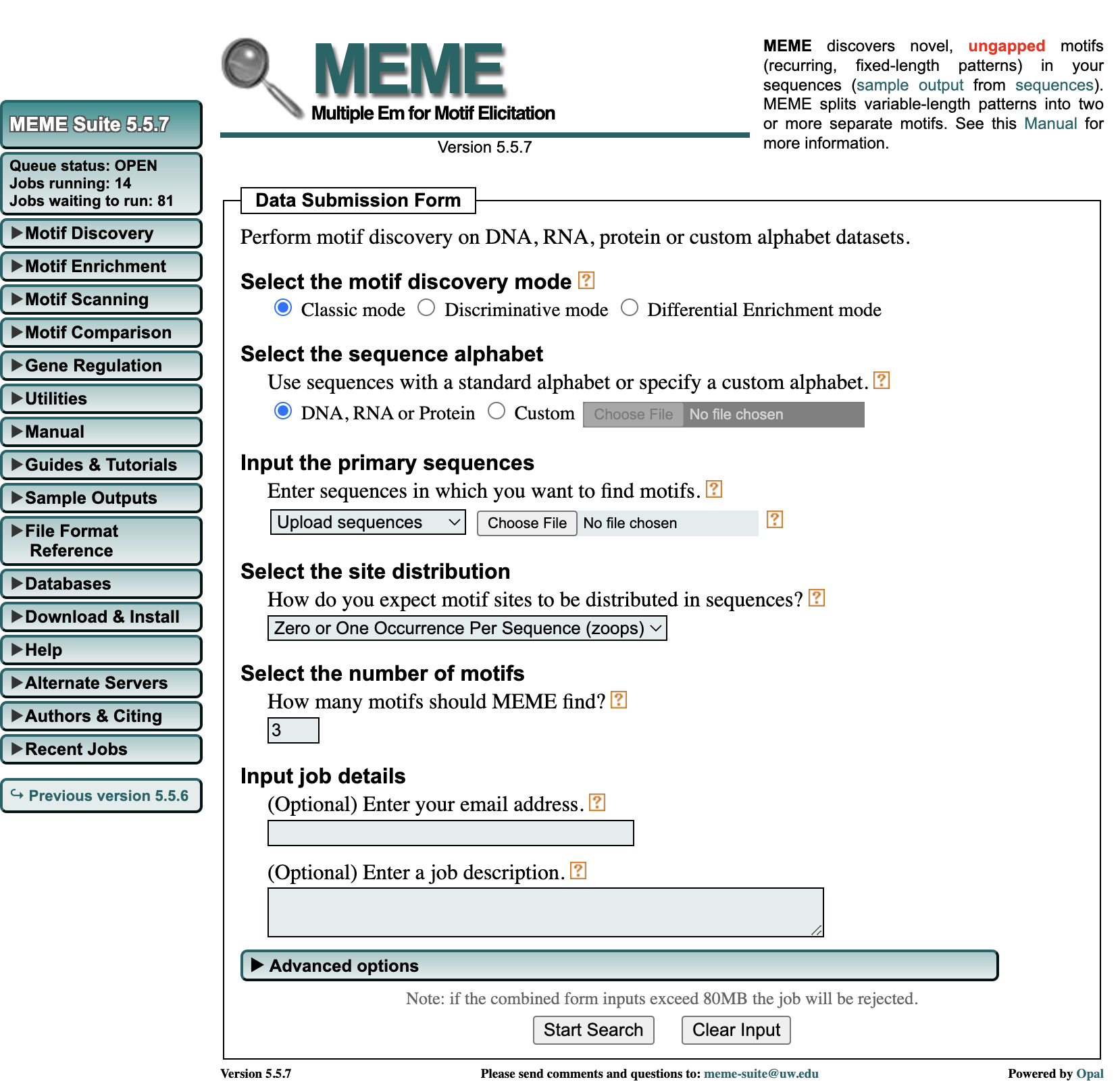

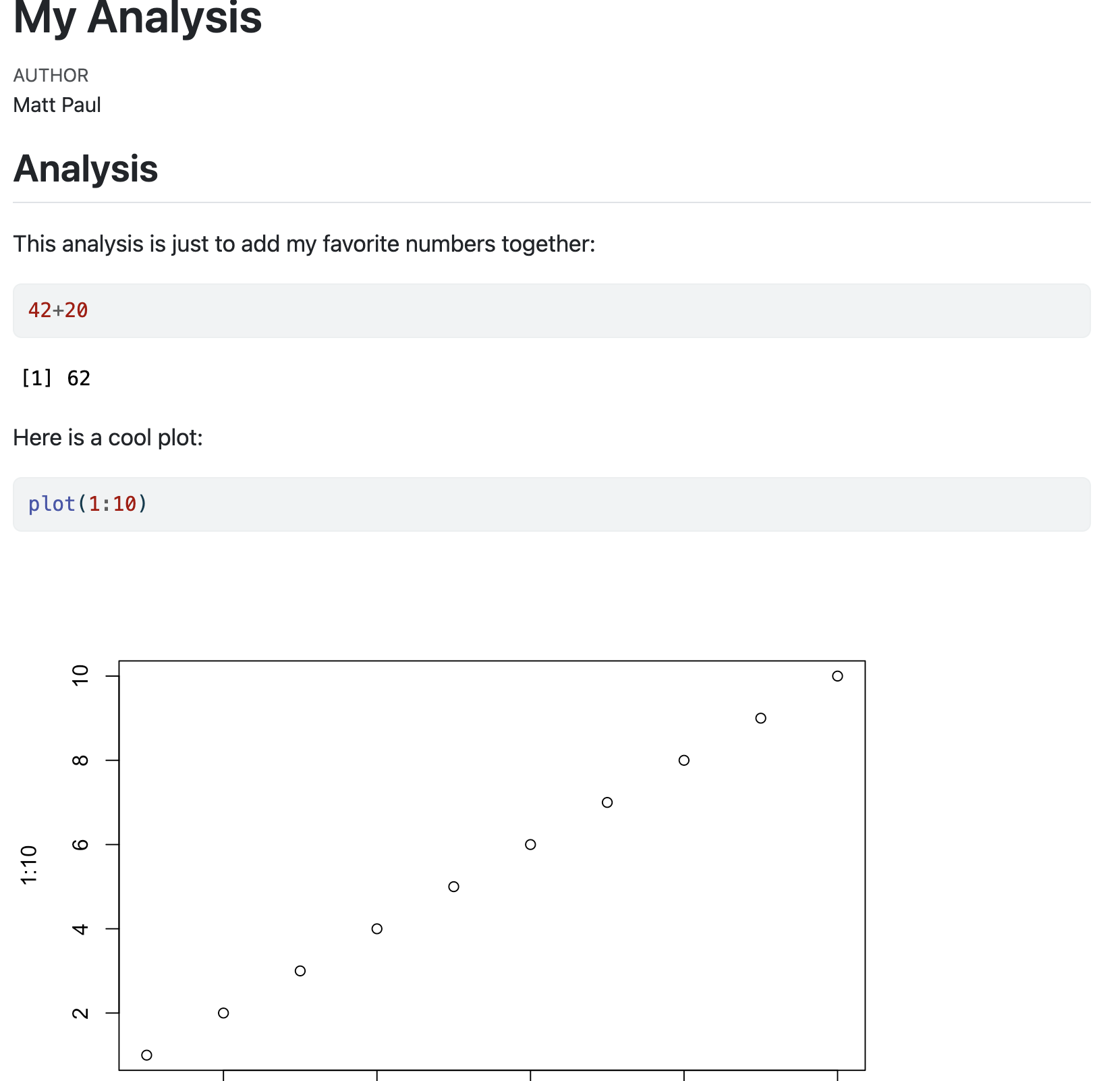

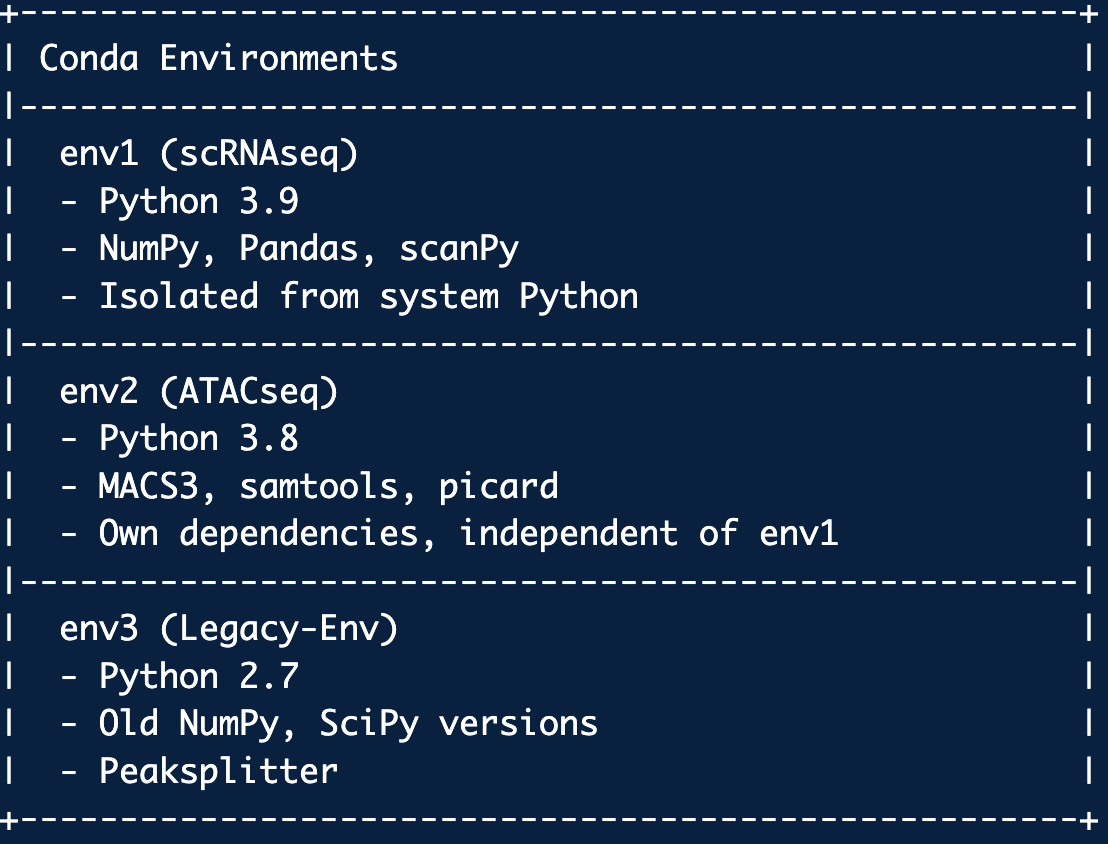

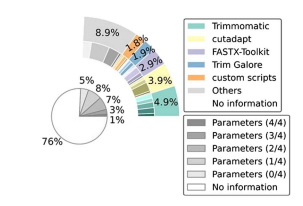

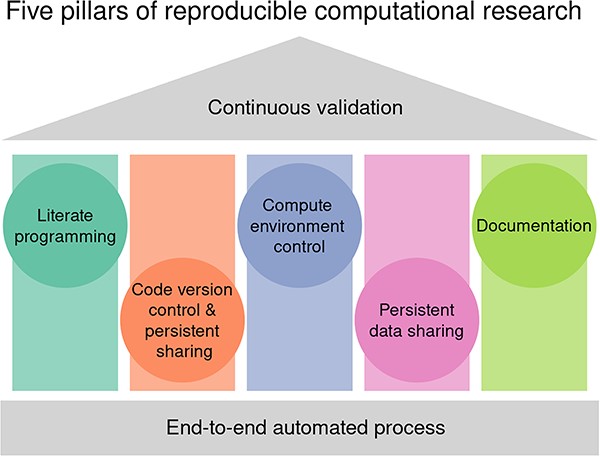

class: center, middle, inverse, title-slide

.title[
# Reproducible Research<br />
<html><br />
<br />
<hr color='#EB811B' size=1px width=796px><br />
</html>
]
.author[
### Rockefeller University, Bioinformatics Resource Centre
]
.date[
### <a href="https://rockefelleruniversity.github.io/RU_reproducibleR/" class="uri">https://rockefelleruniversity.github.io/RU_reproducibleR/</a>
]

---

# Reproducible Research

It is widely agreed that reproducibility is a cornerstone of good science. If a result cannot be validated, is it reliable or even worthwhile?

---

# What does Reproducibility mean?

There is no clear consensus on the details, though. A recent paper collated definitions of reproducibility from the literature. This snapshot shows how different they can be:

* Reproducibility is the ability to run software that produced figures
* Reproducibility is establishing the validity of the reported findings with new data

The terms reproducibility, replicability and repeatability are often conflated, which seems to cause this confusion.

[(Gundersen, et al, 2020)](https://royalsocietypublishing.org/doi/pdf/10.1098/rsta.2020.0210)

---

# What does Reproducibility mean?

<div align="center">
<img src="imgs/reproducible-definition-grid.png" alt="repro" height="400" width="600">
</div>

[The Turing Way](https://book.the-turing-way.org/reproducible-research/overview/overview-definitions)

---

# Reproducibility and bioinformatics

In bioinformatics we typically focus on the integrity of findings: the same results should be obtained when the analysis is repeated with the same data.

Within the field, reproducibility can be considered at many levels.

.pull-left[
What:

* Data
* Methods
* Metadata
* Code
* Software
]

.pull-right[
Who:

* Individual
* Project/Paper
* Lab/Consortium
]

---

# Reproducibility crisis

This is a complex issue, and not enough care has been taken to ensure results are reproducible.
<div align="center">
<img src="imgs/repro_issues.jpg" alt="repro cancer biology" height="400" width="600">
</div>

[(Errington, et al, 2021)](https://elifesciences.org/articles/67995)

---

## A common example

This is an example from a bioinformatics forum. The poster has rerun some code and is getting different results.

.pull-left[

]

.pull-right[

[Link](https://www.reddit.com/r/bioinformatics/comments/194ee1v/same_seurat_code_on_same_dataset_on_two_different/)
]

---

## When to be reproducible?

In the world of 'publish or perish' there is an emphasis on wrapping up quickly and moving on to the next project. Any friction in the process can feel like it is hampering your progress.

As a result, being fully reproducible can seem like an obstacle rather than something necessary and beneficial, especially as most people do not think about it until they are submitting a paper. But it is much easier if you start early.

As the saying goes:

*"The best time to start was yesterday, the next best time is now"*

---

# Current challenge

Even if you have good intentions and start early, it can be tough, as there is no consistent SOP for reproducibility (though several attempts have been [made](https://link.springer.com/article/10.1186/1471-2164-11-S4-S27)). Sometimes it seems like the wild west.

It can also be inherently challenging to decide when and how to be reproducible. The two extremes are clear:

* Regularly used automated pipelines should have robust reproducibility. They are worth the time investment.
* Quick one-line queries or plots of results (that won't be published) do not need a record of your system info, version numbers etc. They are not worth the time investment.

A lot of formal custom analysis falls between these two poles.

---

# Where to start?

There are many good guides. This training will focus on the practical elements.
For more of the theory, I like this [paper](https://academic.oup.com/bib/article/24/6/bbad375/7326135), which discusses an idea called the 5 pillars of reproducibility. We will quickly highlight some key aspects and how they relate to the practical elements we will cover.

<div align="center">
<img src="imgs/pillars.jpeg" alt="pillars" height="300" width="500">
</div>

---

# End-to-End Automation

.pull-left[
All steps should be recorded. Ideally, by the time you are making final figures, everything should be formalized in code.

Interactive tools and web interfaces are great for exploratory analysis, e.g. IGV, MEME, DAVID, Loupe Browser, GSEA etc.

Analyses done in these tools are incredibly hard to reproduce: there are no version numbers, it is hard to know which reference data was used, tools disappear if they are no longer supported, it is easier to make a mistake etc.

Hand in hand with this is the use of *Open Source Software*.
]

.pull-right[

]

---

## Literate programming

.pull-left[
To take this up a level, every figure you produce should be tied to code and a contextual explanation. This is where reproducible reports, such as Jupyter or Rmarkdown/Quarto, become really important.

This direct link ensures provenance: you do not suffer from copy-and-paste errors, or from orphaned PNG plots whose origin you no longer know.

Reports are also a great way to share analysis in progress, as you can add descriptions just like in a lab notebook.
]

.pull-right[

]

---

## Compute Environment Control

.pull-left[
Software management occurs at many levels. Variation in results can arise simply from which computer OS you are using, let alone the versions of packages and software. Keeping a record of this information is the minimum.

There are systems that help you maintain multiple versions of R, Python and other software, along with multiple versions of packages. We will introduce you to Renv, conda and Docker.
These systems use environments to enable shareable software ecosystems, making it much easier for others (or yourself) to keep a record of your system and rerun analyses.
]

.pull-right[

]

---

## Code version control

As an analysis develops over time, it becomes more and more important to control which versions of scripts are being used, especially in a collaborative project.

This is where systems like Git and GitHub come in. They allow you to keep a record of the changes you make while also allowing you to share the code. A repository can be public or private (and switched between the two).

Environment configs can also be saved here to help ensure everyone is working in the same way.

Once you are ready to publish, your project can be made publicly [accessible](https://github.com/RockefellerUniversity/SchneebergerPane_2024).

---

## Data

We will not go into much detail about data beyond this slide. Here are some key points:

* Keep data from a project organized from the start.
* When you publish you will need to deposit raw files and some processed data (e.g. counts, peaks etc.) in a public database like GEO/SRA.
* Try to ensure that what you upload is machine readable and in an open format, e.g. csv or txt files. We don't want weird or proprietary formats.
* Use FAIR data principles (Findable, Accessible, Interoperable, Reusable).

**ALWAYS MAKE SURE YOU HAVE RAW FILES BACKED UP!**

---

## Documentation

.pull-left[
Writing methods can be a headache. But if you have followed the above steps then it will be a lot more straightforward. With the code well documented and with versions maintained and recorded, you will have everything you need. The code can also be linked to the publication.

One often overlooked part is the arguments. It is common to see: *Reads were mapped with bowtie*, when it should actually read: *Reads were mapped with bowtie allowing for 2 mismatches (-n 2)*.
It should also be noted that argument defaults can change, so listing all arguments explicitly in your code is best practice.
]

.pull-right[
Trimming Methods - 1000 Papers

[Simoneau et al, 2019](https://academic.oup.com/bib/article/22/1/140/5669860)
]

---

# Summary

---

# Resources

* [5 pillars of reproducibility](https://academic.oup.com/bib/article/24/6/bbad375/7326135)
* [What is reproducibility?](https://royalsocietypublishing.org/doi/pdf/10.1098/rsta.2020.0210)
* [The Turing Way Book](https://book.the-turing-way.org/)
* [RNAseq methods reporting is bad](https://academic.oup.com/bib/article/22/1/140/5669860)
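
---

## Example: recording your compute environment

The Compute Environment Control slide says that keeping a record of your OS and package versions is the minimum. As a rough illustration only, here is a minimal Python sketch of what such a record could look like. The function name `environment_record` and the package list passed to it are hypothetical choices for this example, not part of the course material, and it does not replace the environment tools introduced in the training.

```python
import json
import platform
from importlib import metadata


def environment_record(packages):
    """Return a dict with the OS, Python version and package versions.

    `packages` is a list of distribution names chosen by the caller
    (hypothetical here); packages that are not installed map to None.
    """
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return {
        "os": platform.platform(),
        "python": platform.python_version(),
        "packages": versions,
    }


if __name__ == "__main__":
    # "pip" is only a placeholder; list the packages your analysis imports.
    print(json.dumps(environment_record(["pip"]), indent=2))
```

Saving this output next to your results (or committing it alongside your code) gives a simple snapshot to compare against if the same analysis later gives different results on another machine.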