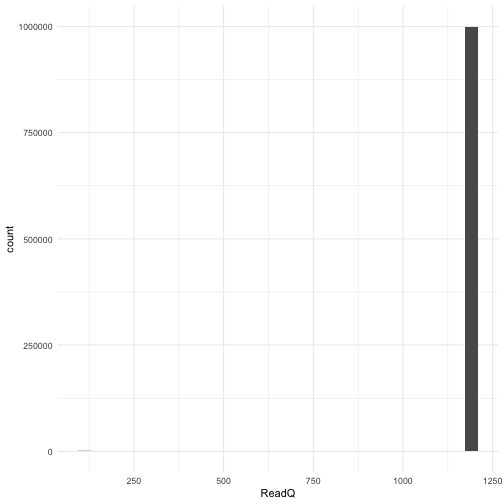

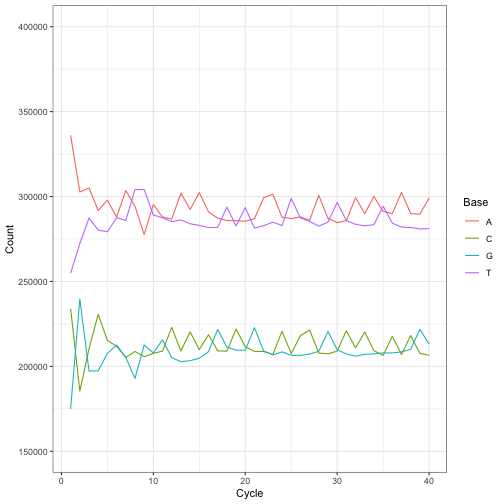

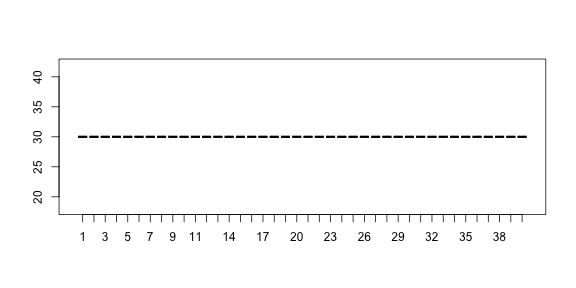

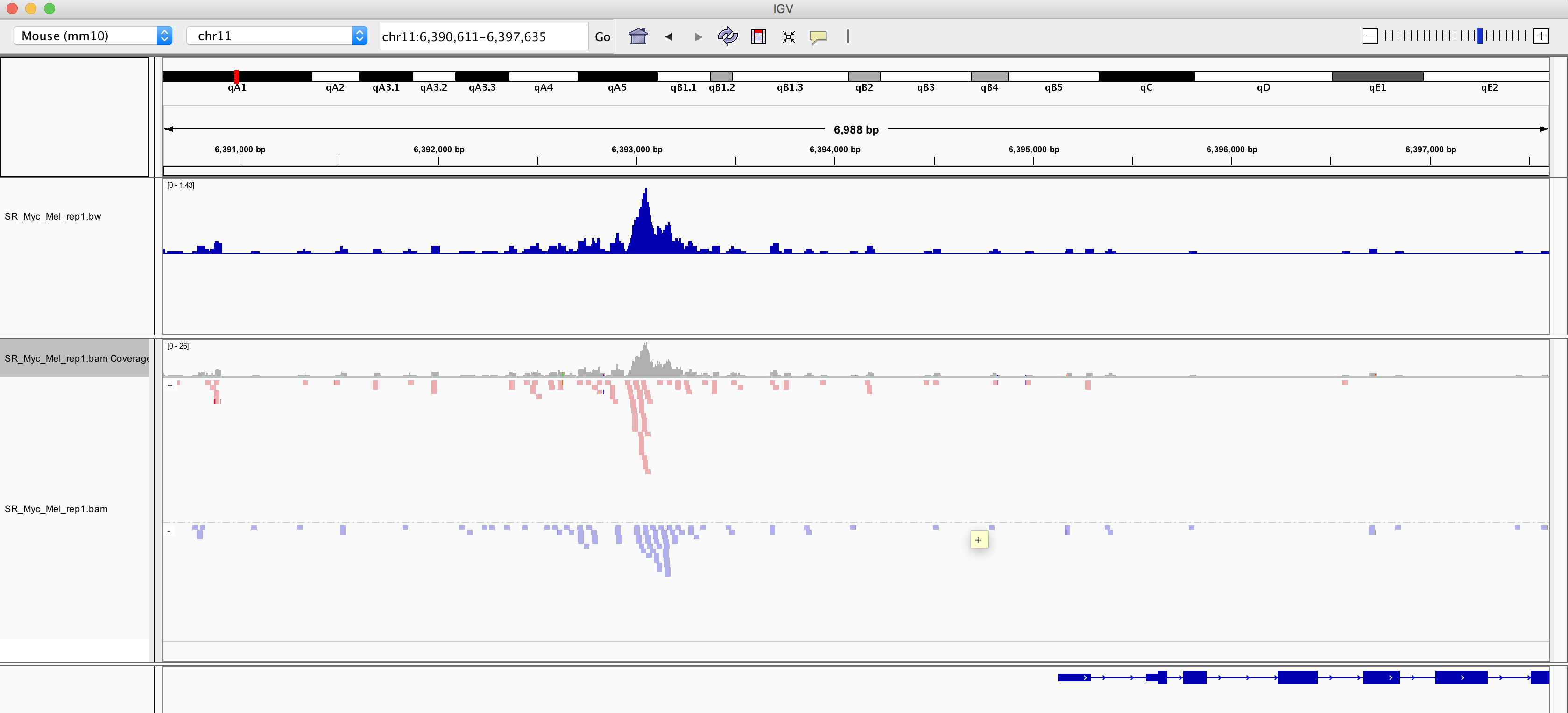

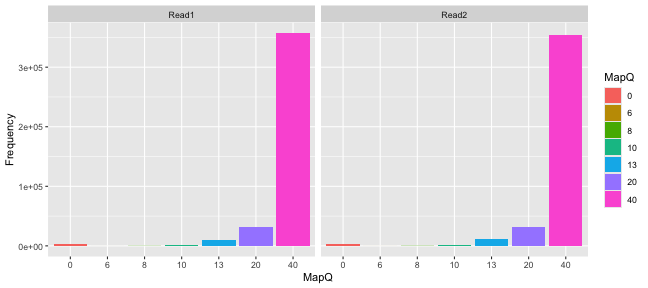

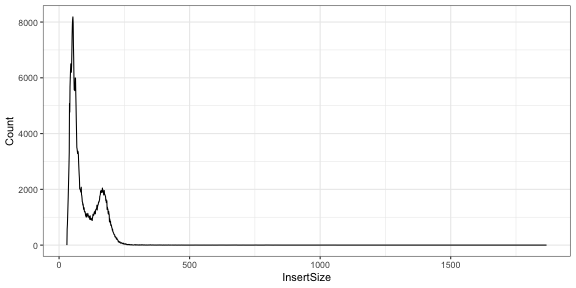

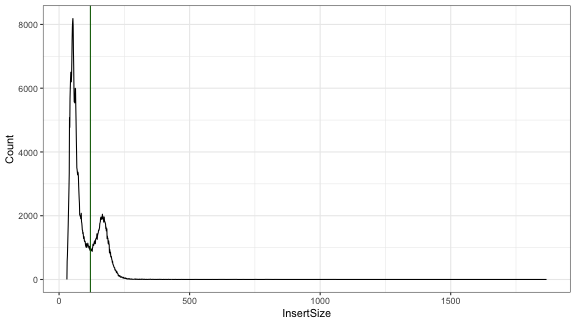

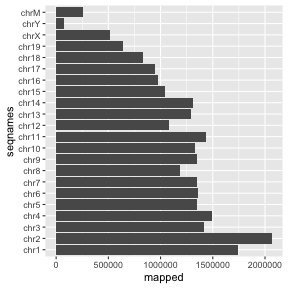

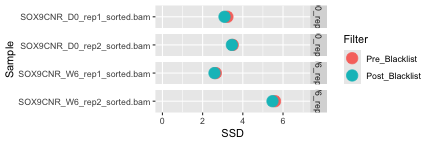

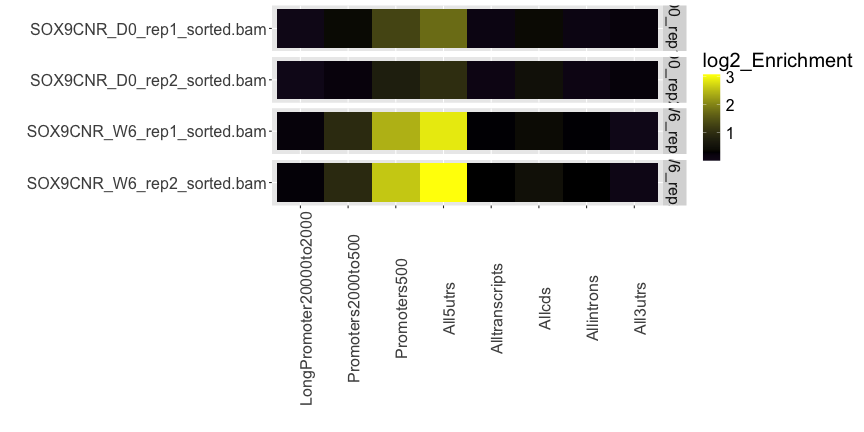

class: middle, inverse, title-slide .title[ # Cut-And-Run ] .subtitle[ ## <html><br /> <br /> <hr color='#EB811B' size=1px width=796px><br /> </html><br /> Bioinformatics Resource Center - Rockefeller University ] .author[ ### <a href="http://rockefelleruniversity.github.io/ATAC.Cut-Run.ChIP/" class="uri">http://rockefelleruniversity.github.io/ATAC.Cut-Run.ChIP/</a> ] .author[ ### <a href="mailto:brc@rockefeller.edu" class="email">brc@rockefeller.edu</a> ] --- ## Overview ??We often put an overview slide that links to the various parts of the course content to make it easy to navigate i.e. this one for intro to r?? - [Set up](https://rockefelleruniversity.github.io/Intro_To_R_1Day/r_course/presentations/singlepage/introToR_Session1.html#set-up) - [Background to R](https://rockefelleruniversity.github.io/Intro_To_R_1Day/r_course/presentations/singlepage/introToR_Session1.html#background-to-r) - [Data types in R](https://rockefelleruniversity.github.io/Intro_To_R_1Day/r_course/presentations/singlepage/introToR_Session1.html#data_types_in_r) - [Reading and writing in R](https://rockefelleruniversity.github.io/Intro_To_R_1Day/r_course/presentations/singlepage/introToR_Session1.html#reading-and-writing-data-in-r) --- class: inverse, center, middle # Set Up <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- ## Materials ??We try to denote each major section of content with these title slides (see above for set up). The hierarchy will help with the different versions of the teaching content i.e. slides and single page.?? ?? Materials just gives the links to download the package Just ensure links are up to date?? All prerequisites, links to material and slides for this course can be found on github. * [CutAndRun](https://rockefelleruniversity.github.io/Intro_To_R_1Day/) Or can be downloaded as a zip archive from here. * [Download zip](https://github.com/rockefelleruniversity/Intro_To_R_1Day/zipball/master) --- ## Course materials ?? this slide just shows where thye can get the course content from the download. You do not need to populate these directories. It will be made during compilation?? Once the zip file in unarchived. All presentations as HTML slides and pages, their R code and HTML practical sheets will be available in the directories underneath. * **r_course/presentations/slides/** Presentations as an HTML slide show. * **r_course/presentations/singlepage/** Presentations as an HTML single page. * **r_course/presentations/r_code/** R code in presentations. * **r_course/exercises/** Practicals as HTML pages. * **r_course/answers/** Practicals with answers as HTML pages and R code solutions. --- ## Set the Working directory Before running any of the code in the practicals or slides we need to set the working directory to the folder we unarchived. You may navigate to the unarchived RU_Course_help folder in the Rstudio menu. **Session -> Set Working Directory -> Choose Directory** or in the console. ``` r setwd("/PathToMyDownload/RU_Course_template/r_course") # e.g. setwd('~/Downloads/Intro_To_R_1Day/r_course') ``` --- class: inverse, center, middle # ChIP Approaches <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- ## Epigenomic Approaches There are a variety of genomics techniques to start to profile the epigenome of cells. They fit a few different classes: TF-bound/Histone Modifications: Cut and Run, Cut and Tag, ChIP-seq Open Regions: ATAC-seq, MNase-seq and DNase-seq Genome organization - Hi-C, HiCAR, Capture-C --- ## TF-bound/Histone Modifications <div align="center"> <img src="imgs/41596_2020_373_Fig1_HTML.png" alt="setup" height="400" width="600"> </div> [(Oker, et al, 2020)](https://www.nature.com/articles/s41596-020-0373-x) --- ## TF-bound/Histone Modifications * ChIP-seq - Strengths: Well established and validated across labs with robust antibody catalogs. - Weakness: High background, large cell input, potential artifacts from crosslinking/sonication, longest workflow. * Cut & Run - Strengths: High signal-to-noise ratio, low input required (100 to 100,000 cells/nuclei), good resolution (20-80bp), can be used with crosslinking for weak/transient interactions, fewer reads (3-8 million). - Weakness: Sensitive to sample quality and nuclei preparation. * Cut & Tag - Strengths: Highest signal-to-noise ratio, low input required (100 to 100,000 cells/nuclei), highest resolution (5-30bp), fewest reads (2 million). - Weakness: The Tn5 bias toward open chromatin possible and GC, can't be used with crosslinking so can be tricky with transient TFs. --- class: inverse, center, middle # Fastq QC <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- ## The data Our raw sequencing data will be in FASTQ format. <div align="center"> <img src="imgs/fq1.png" alt="igv" height="200" width="600"> </div> --- ## The data For your own data you will typically receive a fastq from the genomics group you are working with. For published data the fastq will be available on GEO/SRA or ENA. For example we will be working today with data from the Fuchs lab: [*The pioneer factor SOX9 competes for epigenetic factors to switch stem cell fates*](https://www.nature.com/articles/s41556-023-01184-y) You can download the fastq files (if you want) directly from ENA: 1) [Cut and Run](https://www.ebi.ac.uk/ena/browser/view/PRJNA858098). We will work through how to run these early steps, but we will also provide intermediate files once we have completed some of the heavy processing as some of these steps will take too long to run in these sessions. --- ## Why QC? At this time we will want to review the QC of our data. We can look for features like: * Lack of library complexity * Adapter contamination * Poor sequencing quality --- ## How to QC There are two main approaches: 1) Import the fastq into R and directly check metrics. 2) Use QC software like [fastp](https://github.com/OpenGene/fastp) or [FastQC](https://github.com/s-andrews/FastQC). --- ## Working with raw epigenomics data Once we have downloaded the raw FASTQ data we can use the [ShortRead package](https://bioconductor.org/packages/release/bioc/html/ShortRead.html) to review our sequence data quality. We have more detailed course material on how to work with raw sequencing data in the [**FASTQ in Bioconductor** session.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/FastQInBioconductor.html#1) First we load the [ShortRead library.](https://bioconductor.org/packages/release/bioc/html/ShortRead.html) ``` r library(ShortRead) ``` --- ## Working with raw data First we will review the raw sequencing reads using functions in the [ShortRead package.](https://bioconductor.org/packages/release/bioc/html/ShortRead.html). We do not need to review all reads in the file to can gain an understanding of data quality. We can simply review a subsample of the reads and save ourselves some time and memory. Note when we subsample we retrieve random reads from across the entire FASTQ file. This is important as FASTQ files are often ordered by their position on the sequencer. --- ## Reading a subsample We can subsample from a FASTQ file using functions in **ShortRead** package. Here we use the [**FastqSampler** and **yield** function](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/FastQInBioconductor.html#41) to randomly sample a defined number of reads from a FASTQ file. Here we subsample 1 million reads. This should be enough to have an understanding of the quality of the data. * NOTE: This step may take a little time, so you can skip it for now.* ``` r fqSample <- FastqSampler("~/Downloads/SOX9CNR_D0_rep1_R1.fastq.gz", n = 10^6) fastq <- yield(fqSample) ``` --- ## Reading a subsample We have provided a subsampled version of our fastq file here for you to read in directly. ``` r fastq <- readFastq(dirPath = "data/SOX9CNR_D0_rep1_R1_subsample.fastq.gz") ``` --- ## Working with raw CUTandRun data The resulting object is a [ShortReadQ object](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/FastQInBioconductor.html#10) showing information on the number of cycles, base pairs in reads, and number of reads in memory. ``` r fastq ``` ``` ## class: ShortReadQ ## length: 1000000 reads; width: 40 cycles ``` --- ## Raw CUTandRun data QC If we wished, we can assess information from the FASTQ file using [accessor functions.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/FastQInBioconductor.html#15) * **sread()** - Retrieve sequence of reads. * **quality()** - Retrieve quality of reads as ASCII scores. * **id()** - Retrieve IDs of reads. ``` r readSequences <- sread(fastq) readQuality <- quality(fastq) readIDs <- id(fastq) readSequences ``` ``` ## DNAStringSet object of length 1000000: ## width seq ## [1] 40 CAACTGAATTTAGATAGGGAAGTAGCAGAAGGTTACATCT ## [2] 40 AGGTTTTTGGGGTAAAATGCTCGCATAAATGACGGATCCC ## [3] 40 CACTTCGTCTGCCAGCGGCGCAACGGCGTGGCGATGCCGG ## [4] 40 TCTGAGCTCATCGTAAGAAGGCATGGCAGCAAGGGTGTGG ## [5] 40 TCAGCTGTGGTGTGAGCTGCTTGCAAAAACATCGTGCTAG ## ... ... ... ## [999996] 40 GTACGTTAAAACAGCTGTTACACATGGATCTATGCACTTA ## [999997] 40 GTGAGAGGGTGCGCCAGAGAACCTGACAGCTTCTGGAACA ## [999998] 40 GAAGCTGTGTCATATGTCATGCTCTGGTTAAAGGTTAACT ## [999999] 40 TAGAGATGAAACCCATGTCTCAGAGCTCTTTCCTTCACAT ## [1000000] 40 TTGCATACATTAACTGGCTTGAGGTAACTATTATTTTTCC ``` --- ## Quality with raw CUTandRun data We can check some simple quality metrics for our subsampled FASTQ data. First, we can review the overall reads' quality scores. We use the [**alphabetScore()** function with our read's qualitys](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/FastQInBioconductor.html#28) to retrieve the sum quality for every read from our subsample. ``` r readQuality <- quality(fastq) readQualities <- alphabetScore(readQuality) readQualities[1:10] ``` ``` ## [1] 1200 1200 1200 1200 1200 1200 1200 1200 1200 1200 ``` --- ## Quality with raw CUTandRun data We can then produce a histogram of quality scores to get a better understanding of the distribution of scores. ``` r library(ggplot2) toPlot <- data.frame(ReadQ = readQualities) ggplot(toPlot, aes(x = ReadQ)) + geom_histogram() + theme_minimal() ``` ``` ## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`. ``` <!-- --> --- ## Base frequency with raw CUTandRun data We can review the occurrence of DNA bases within reads and well as the occurrence of DNA bases across sequencing cycles using the [**alphabetFrequency()**](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/FastQInBioconductor.html#18) and [**alphabetByCycle()**](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/FastQInBioconductor.html#30) functions respectively. Here we check the overall frequency of **A, G, C, T and N (unknown bases)** in our sequence reads. ``` r readSequences <- sread(fastq) readSequences_AlpFreq <- alphabetFrequency(readSequences) readSequences_AlpFreq[1:3, ] ``` ``` ## A C G T M R W S Y K V H D B N - + . ## [1,] 15 5 10 10 0 0 0 0 0 0 0 0 0 0 0 0 0 0 ## [2,] 11 7 11 11 0 0 0 0 0 0 0 0 0 0 0 0 0 0 ## [3,] 5 14 15 6 0 0 0 0 0 0 0 0 0 0 0 0 0 0 ``` --- ## Base frequency with raw CUTandRun data Once we have the frequency of DNA bases in our sequence reads we can retrieve the sum across all reads. ``` r summed__AlpFreq <- colSums(readSequences_AlpFreq) summed__AlpFreq[c("A", "C", "G", "T", "N")] ``` ``` ## A C G T N ## 11744925 8497685 8344368 11412114 908 ``` --- ## Assess by cycle with raw CUTandRun data We can review DNA base occurrence by cycle using the [**alphabetByCycle()** function.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/FastQInBioconductor.html#30) ``` r readSequences_AlpbyCycle <- alphabetByCycle(readSequences) readSequences_AlpbyCycle[1:4, 1:10] ``` ``` ## cycle ## alphabet [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] ## A 336004 302683 305045 291736 297843 287897 303525 294275 277613 295295 ## C 233900 185367 210355 230639 215323 211798 205233 208795 205654 207707 ## G 174963 239691 197263 197345 207617 212597 205342 192901 212591 207859 ## T 254822 272252 287337 280280 279217 287564 285893 304026 304142 289135 ``` --- ## Assess by cycle with raw CUTandRun data We often plot this to visualise the base occurrence over cycles to observe any bias. First we arrange the base frequency into a data frame. ``` r AFreq <- readSequences_AlpbyCycle["A", ] CFreq <- readSequences_AlpbyCycle["C", ] GFreq <- readSequences_AlpbyCycle["G", ] TFreq <- readSequences_AlpbyCycle["T", ] toPlot <- data.frame(Count = c(AFreq, CFreq, GFreq, TFreq), Cycle = rep(1:40, 4), Base = rep(c("A", "C", "G", "T"), each = 40)) ``` --- ## Assess by cycle with raw CUTandRun data Now we can plot the frequencies using ggplot2 ``` r ggplot(toPlot, aes(y = Count, x = Cycle, colour = Base)) + geom_line() + theme_bw() ``` ``` r ggplot(toPlot, aes(y = Count, x = Cycle, colour = Base)) + geom_line() + ylim(150000, 4e+05) + theme_bw() ``` <!-- --> --- ## Assess by cycle with raw CUTandRun data We can also assess mean read quality over cycles. This will allow us to identify whether there are any issues with quality dropping off over time. For this we use the [**as(*read_quality*,"matrix")**](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/FastQInBioconductor.html#29) function first to translate our ASCII quality scores to numeric quality scores. ``` r qualAsMatrix <- as(readQuality, "matrix") qualAsMatrix[1:2, ] ``` ``` ## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10] [,11] [,12] [,13] [,14] [,15] [,16] ## [1,] 30 30 30 30 30 30 30 30 30 30 30 30 30 30 30 30 ## [2,] 30 30 30 30 30 30 30 30 30 30 30 30 30 30 30 30 ## [,17] [,18] [,19] [,20] [,21] [,22] [,23] [,24] [,25] [,26] [,27] [,28] [,29] [,30] ## [1,] 30 30 30 30 30 30 30 30 30 30 30 30 30 30 ## [2,] 30 30 30 30 30 30 30 30 30 30 30 30 30 30 ## [,31] [,32] [,33] [,34] [,35] [,36] [,37] [,38] [,39] [,40] ## [1,] 30 30 30 30 30 30 30 30 30 30 ## [2,] 30 30 30 30 30 30 30 30 30 30 ``` --- ## Assess by cycle with raw CUTandRun data We can now [visualise qualities across cycles using a boxplot.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/exercises/answers/fastq_answers.html) ``` r boxplot(qualAsMatrix[1:1000, ]) ``` <!-- --> --- ## Assess by cycle with raw CUTandRun data In this case the distribution of reads quality scores and read qualities over time look okay. We will often want to access FASTQ samples together to see if any samples stick out by these metrics. Here we observed a second population of low quality scores so will remove some reads with low quality scores and high unknown bases. --- class: inverse, center, middle # Aligning CUT&RUN and ATACseq reads <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- ## Aligning CUT&RUN and ATACseq reads Following assessment of read quality and any read filtering we applied, we will want to align our reads to the genome so as to identify any genomic locations showing enrichment for aligned reads above background. Since CUT&RUN and ATACseq reads will align continuously against our reference genome we can use [genomic aligners](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/AlignmentInBioconductor.html#7), as opposed to aligners that account for splice junctions (e.g. for RNAseq). The resulting BAM file will contain aligned sequence reads for use in further analysis. <div align="center"> <img src="imgs/sam2.png" alt="igv" height="200" width="700"> </div> --- ## Creating a reference genome First we need to retrieve the sequence information for the genome of interest in [FASTA format](https://rockefelleruniversity.github.io/Genomic_Data/presentations/slides/GenomicsData.html#9). We can use the [BSgenome libraries to retrieve the full sequence information.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/SequencesInBioconductor.html#4) For the mouse mm10 genome we load the package **BSgenome.Mmusculus.UCSC.mm10**. ``` r library(BSgenome.Mmusculus.UCSC.mm10) BSgenome.Mmusculus.UCSC.mm10 ``` ``` ## | BSgenome object for Mouse ## | - organism: Mus musculus ## | - provider: UCSC ## | - genome: mm10 ## | - release date: Sep 2017 ## | - 239 sequence(s): ## | chr1 chr2 chr3 chr4 ## | chr5 chr6 chr7 chr8 ## | chr9 chr10 chr11 chr12 ## | chr13 chr14 chr15 chr16 ## | chr17 chr18 chr19 chrX ## | ... ... ... ... ## | chrX_KQ030495_fix chrX_KQ030496_fix chrX_KQ030497_fix chrX_KZ289092_fix ## | chrX_KZ289093_fix chrX_KZ289094_fix chrX_KZ289095_fix chrY_JH792832_fix ## | chrY_JH792833_fix chrY_JH792834_fix chr1_KK082441_alt chr11_KZ289073_alt ## | chr11_KZ289074_alt chr11_KZ289075_alt chr11_KZ289077_alt chr11_KZ289078_alt ## | chr11_KZ289079_alt chr11_KZ289080_alt chr11_KZ289081_alt ## | ## | Tips: call 'seqnames()' on the object to get all the sequence names, call 'seqinfo()' to ## | get the full sequence info, use the '$' or '[[' operator to access a given sequence, see ## | '?BSgenome' for more information. ``` --- ## Creating a reference genome We will only use the major chromosomes for our analysis so we may exclude random and unplaced contigs. Here we cycle through the major chromosomes and create a [**DNAStringSet** object from the retrieved sequences](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/SequencesInBioconductor.html#17). ``` r mainChromosomes <- paste0("chr", c(1:19, "X", "Y", "M")) mainChrSeq <- lapply(mainChromosomes, function(x) BSgenome.Mmusculus.UCSC.mm10[[x]]) names(mainChrSeq) <- mainChromosomes mainChrSeqSet <- DNAStringSet(mainChrSeq) mainChrSeqSet ``` ``` ## DNAStringSet object of length 22: ## width seq names ## [1] 195471971 NNNNNNNNNNNNNNNNNNNNNNNNNNN...NNNNNNNNNNNNNNNNNNNNNNNNNN chr1 ## [2] 182113224 NNNNNNNNNNNNNNNNNNNNNNNNNNN...NNNNNNNNNNNNNNNNNNNNNNNNNN chr2 ## [3] 160039680 NNNNNNNNNNNNNNNNNNNNNNNNNNN...NNNNNNNNNNNNNNNNNNNNNNNNNN chr3 ## [4] 156508116 NNNNNNNNNNNNNNNNNNNNNNNNNNN...NNNNNNNNNNNNNNNNNNNNNNNNNN chr4 ## [5] 151834684 NNNNNNNNNNNNNNNNNNNNNNNNNNN...NNNNNNNNNNNNNNNNNNNNNNNNNN chr5 ## ... ... ... ## [18] 90702639 NNNNNNNNNNNNNNNNNNNNNNNNNNN...NNNNNNNNNNNNNNNNNNNNNNNNNN chr18 ## [19] 61431566 NNNNNNNNNNNNNNNNNNNNNNNNNNN...NNNNNNNNNNNNNNNNNNNNNNNNNN chr19 ## [20] 171031299 NNNNNNNNNNNNNNNNNNNNNNNNNNN...NNNNNNNNNNNNNNNNNNNNNNNNNN chrX ## [21] 91744698 NNNNNNNNNNNNNNNNNNNNNNNNNNN...NNNNNNNNNNNNNNNNNNNNNNNNNN chrY ## [22] 16299 GTTAATGTAGCTTAATAACAAAGCAAA...CTCTATTACGCAATAAACATTAACAA chrM ``` --- # Creating a reference genome Now we have a **DNAStringSet** object we can use the [**writeXStringSet** to create our FASTA file of sequences to align to.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/SequencesInBioconductor.html#22) ``` r writeXStringSet(mainChrSeqSet, "BSgenome.Mmusculus.UCSC.mm10.mainChrs.fa") ``` --- ## Creating an Rsubread index We will be aligning using the **seed-and-vote** algorithm from the folks behind subread. We can therefore use the **Rsubread** package. Before we attempt to align our FASTQ files, we will need to first build an index from our reference genome using the **buildindex()** function. The [**buildindex()** function simply takes the parameters of our desired index name and the FASTA file to build index from.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/AlignmentInBioconductor.html#14) *NOTE: Building an index is memory intensive and by default is set to 8GB. This may be too large for your laptop or Desktop.* ``` r library(Rsubread) buildindex("mm10_mainchrs", "BSgenome.Mmusculus.UCSC.mm10.mainChrs.fa", memory = 8000, indexSplit = TRUE) ``` --- ## Aligning CUT&RUN Reads In the following slides we will focus on aligning the CUT&RUN data, but alignment of ATACseq data will be the same. In the post processing and peak calling section there are some considerations for each method that we will cover. CUT&RUN and ATACseq data are typically paired-end sequencing, and most often comes as two files typically with *_1* and *_2* or *_R1* and *_R2* in the file name to denote which number in pair a file is. The two matched paired-end read files will (most often) contain the same number of reads and the reads will be ordered the same in both files. --- ## Aligning Sequence Reads Below we read in the first 1000 reads in replicate 1 of the Week 6 CUT&RUN FASTQ file. The read names will match across files for paired reads with the exception of a 1 or 2 in name to signify if the read was first or second in a pair. ``` r require(ShortRead) # first 1000 reads in each file read1 <- readFastq("data/SOX9CNR_W6_rep1_1K_R1.fastq.gz") read2 <- readFastq("data/SOX9CNR_W6_rep1_1K_R2.fastq.gz") id(read1)[1:2] ``` ``` ## BStringSet object of length 2: ## width seq ## [1] 17 SRR20110409.9 9/1 ## [2] 19 SRR20110409.10 10/1 ``` ``` r id(read2)[1:2] ``` ``` ## BStringSet object of length 2: ## width seq ## [1] 17 SRR20110409.9 9/2 ## [2] 19 SRR20110409.10 10/2 ``` --- ## Aligning Sequence Reads Now that we have an index and our FASTQ files, we can perform the alignment. Our two FASTQ files that have been filtered with rfastp for QC metrics, and are [available on dropbox](https://www.dropbox.com/scl/fo/ktmtrypv57tpckk7ehmow/AN-f1t17gbBtFpxjdFK8WRY?rlkey=3dnog47inu8y0giqct1g0xy4q&st=zq28gbmj&dl=0) if you have built the index and want to try the alignment on your own. ``` r read1_toAlign <- "~/Downloads/SOX9CNR_W6_rep1_QC_R1.fastq.gz" read2_toAlign <- "~/Downloads/SOX9CNR_W6_rep1_QC_R2.fastq.gz" ``` --- ## Rsubread alignment of CUT&RUN We can align our raw sequence data in FASTQ format to the FASTA file of our mm10 genome sequence using the **Rsubread** package. Specifically we will be using the **align** function as this utilizes the subread genomic alignment algorithm. The [**align()** function accepts arguments](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/AlignmentInBioconductor.html#15) for the index to align to, the FASTQ(s) to align, the name of output BAM, the mode of alignment (rna or dna), and fragment length filters. We will go through alignment of the CUT&RUN. ``` r myMapped <- align("mm10_mainchrs", readfile1 = read1_toAlign, readfile2 = read2_toAlign, type = "dna", output_file = "SOX9CNR_W6_rep1.bam", nthreads = 4, minFragLength = 0, maxFragLength = 2000) ``` --- ## Sort and index CUT&RUN reads As before, we sort and index our files using the [**Rsamtools** packages **sortBam()** and **indexBam()** functions respectively.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/AlignedDataInBioconductor.html#10) The resulting sorted and indexed BAM file is now ready for use in external programs such as IGV as well as for further downstream analysis in R. ``` r library(Rsamtools) sortBam("SOX9CNR_W6_rep1.bam", "SOX9CNR_W6_rep1_sorted") indexBam("SOX9CNR_W6_rep1_sorted.bam") ``` --- ## Create a bigWig We can also create a bigWig from our sorted, indexed BAM file to allow us to quickly review our data in IGV. First we use the [**coverage()** function to create an **RLElist object** containing our coverage scores.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/Summarising_Scores_In_Bioconductor.html#13) ``` r forBigWig <- coverage("SOX9CNR_W6_rep1_sorted.bam") forBigWig ``` ``` RleList of length 22 $chr1 integer-Rle of length 195471971 with 1828459 runs Lengths: 3004130 40 121 40 1365 34 6 34 113 ... 13 9 8 6 4 30 6 100490 Values : 0 1 0 1 0 1 2 1 0 ... 6 3 2 3 4 2 1 0 $chr2 integer-Rle of length 182113224 with 1855901 runs Lengths: 3050000 31 4 71 9 30 1 3 3 ... 119 39 1 61 6 34 6 100214 Values : 0 3 2 0 1 2 1 2 1 ... 0 2 1 0 1 2 1 0 ``` --- ## Create a bigWig We can now export our [**RLElist object** as a bigWig using the **rtracklayer** package's **export.bw()** function.](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/GenomicScores_In_Bioconductor.html#40) ``` r library(rtracklayer) export.bw(forBigWig, con = "SOX9CNR_W6_rep1.bw") ``` --- ## Create a bigWig We may wish to normalize our coverage to allow us to compare enrichment across samples. First we we can retrieve and plot the number of mapped reads using [the **idxstatsBam()** function.](https://rockefelleruniversity.github.io/Bioconductor_Introduction//presentations/slides/AlignedDataInBioconductor.html#16) ``` r mappedReads <- idxstatsBam("SOX9CNR_W6_rep1_sorted.bam") TotalMapped <- sum(mappedReads[, "mapped"]) TotalMapped ``` ``` [1] 24848310 ``` --- ## Create a bigWig To make the normalized bigwig we can use the [**weight** parameter in the **coverage()**](https://rockefelleruniversity.github.io/Bioconductor_Introduction/presentations/slides/Summarising_Scores_In_Bioconductor.html#20) to scale our reads to the number of mapped reads multiplied by a million (reads per million). This bigwig is available [here on dropbox](https://www.dropbox.com/scl/fo/zsxaw6uv19ar8k0svcq4a/ABBTWY3xEREQrKGU3hnaMuQ?rlkey=gk7zdl27xagl5nhz6k8amz5yy&st=2hmzbrvp&dl=0) for download if you want to check it out. ``` r forBigWig <- coverage("SOX9CNR_W6_rep1_sorted.bam", weight = (10^6)/TotalMapped) forBigWig export.bw(forBigWig, con = "SOX9CNR_W6_rep1_weighted.bw") ``` --- # BAM and bigWig  --- class: inverse, center, middle # Post alignment processing - CUT&RUN <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- ## Peak calling software - CUT&RUN To identify regions of SOX9 transcription factor binding we can use a **peak caller**. Although many peak callers are available in R and beyond, one the most popular and widely used peak caller remains [**MACS**](https://macs3-project.github.io/MACS/). Another peak caller, [**SEACR**](https://github.com/FredHutch/SEACR), was developed specificlly for CUT&RUN. We will use both. <img src="imgs/krt5_signal_peakintro.png" alt="igv" height="300" width="700"> --- ## Consider an appropriate input While we don't have one in this test data set, inputs are sometimes used in peak calling. They are theoretically less critical in CUT&RUN compared to ChIPseq due to lower background signal, but this is not always the case. * Input samples in CUT&RUN are typically made from IgG pulldowns * Allows for control of artefact regions which occur across samples. For example: When using tumour samples for CUT&RUN, it might be important to have matched input samples. Differing conditions of same tissue may share common input. --- ## Install software with conda and Herper We will install a version of MACS that is not available in R. It is possible to install it on Mac and Linux using the [Anaconda](https://anaconda.org/bioconda/MACS3) package repository (unfortunately there is not a Windows implementation). Anaconda is a huge collection of version controlled packages that can be installed through the conda package management system. With conda it is easy to create and manage environments that have a variety of different packages in them. We can interact with the Anaconda package system using the R package [Herper](https://bioconductor.org/packages/release/bioc/html/Herper.html). This was created by us here at the BRC and is part of Bioconductor. ``` r BiocManager::install("Herper") library(Herper) ``` --- ## Install software with conda and Herper First, we will use Herper to install MACS with the `install_CondaTools` function. This will automatically install miniconda, a lightweight version of Anaconda. We just need to tell `install_CondaTools` the following: * what tool/s you want * the name of the environment you want to build. * path where the miniconda currently is or should be installed * whether you are updating a current environment (optional) We will install MACS (version 3), in addition to samtools and bedtools, which will be used to prepare our BAM files for use by MACS and SEACR. ``` r dir.create("miniconda") macs_paths <- install_CondaTools(tools = c("macs3", "samtools", "bedtools"), env = "CnR_analysis", pathToMiniConda = "miniconda") ``` --- ## Install software with conda and Herper Behind the scenes, Herper will install the most minimal version of conda (called miniconda), and then will create a new environment into which MACS, bedtools, and samtools will be installed. When you run the function it returns and prints out where the software is installed. ``` r macs_paths ``` ``` $pathToConda [1] "miniconda/bin/conda" $environment [1] "CnR_analysis" $pathToEnvBin [1] "miniconda/envs/CnR_analysis/bin" ``` --- ## Install software with conda and Herper After the software are installed, we can then use them using the `with_CondaEnv` function from Herper paired with the `system` function that allows us to run terminal commands from R. ``` r Herper::with_CondaEnv("CnR_analysis", pathToMiniConda = "miniconda", system("samtools sort -h")) ``` ``` samtools: invalid option -- h Usage: samtools sort [options...] [in.bam] Options: -l INT Set compression level, from 0 (uncompressed) to 9 (best) -u Output uncompressed data (equivalent to -l 0) -m INT Set maximum memory per thread; suffix K/M/G recognized [768M] -M Use minimiser for clustering unaligned/unplaced reads -R Do not use reverse strand (only compatible with -M) -K INT Kmer size to use for minimiser [20] -I FILE Order minimisers by their position in FILE FASTA -w INT Window size for minimiser indexing via -I ref.fa [100] -H Squash homopolymers when computing minimiser -n Sort by read name (natural): cannot be used with samtools index -N Sort by read name (ASCII): cannot be used with samtools index -t TAG Sort by value of TAG. Uses position as secondary index (or read name if -n is set) -o FILE Write final output to FILE rather than standard output -T PREFIX Write temporary files to PREFIX.nnnn.bam --no-PG Do not add a PG line --template-coordinate Sort by template-coordinate --input-fmt-option OPT[=VAL] Specify a single input file format option in the form of OPTION or OPTION=VALUE -O, --output-fmt FORMAT[,OPT[=VAL]]... Specify output format (SAM, BAM, CRAM) --output-fmt-option OPT[=VAL] Specify a single output file format option in the form of OPTION or OPTION=VALUE --reference FILE Reference sequence FASTA FILE [null] -@, --threads INT Number of additional threads to use [0] --write-index Automatically index the output files [off] --verbosity INT Set level of verbosity ``` --- ## Proper pairs - CUT&RUN Now that we have our aligned paired-end data contained in BAM files, and the software installed to eventually call peaks, we can assess some initial QC metrics like alignment quality and fragment length. We will read our newly aligned data using the GenomicAlignments package. Here we only want reads which are properly paired so we will use the **ScanBamParam()** and **scanBamFlag()** functions to control what will be read into R. We set the **scanBamFlag()** function parameters **isProperPair** to TRUE so as to only read in reads paired in alignment. ``` r library(GenomicAlignments) flags = scanBamFlag(isProperPair = TRUE) ``` --- ## Proper pairs - CUT&RUN We can now use these flags with the **ScanBamParam()** function to read in only properly paired reads. We additionally specify information to be read into R using the **what** parameter. Importantly we specify the insert size information - **isize**. To reduce memory footprint we read only information from chromosome 18 by specifying a GRanges object to **which** parameter. ``` r myParam = ScanBamParam(flag = flags, what = c("qname", "mapq", "isize", "flag")) myParam ``` ``` ## class: ScanBamParam ## bamFlag (NA unless specified): isProperPair=TRUE ## bamSimpleCigar: FALSE ## bamReverseComplement: FALSE ## bamTag: ## bamTagFilter: ## bamWhich: 0 ranges ## bamWhat: qname, mapq, isize, flag ## bamMapqFilter: NA ``` --- ## GAlignmentPairs - CUT&RUN Now we have set up the **ScanBamParam** object, we can use the **readGAlignmentPairs()** function to read in our paired-end CUT&RUN data using the **readGAlignments()** function. In the *data* folder is a BAM file contianing the reads from only chromosome 18 that we will read into R. The resulting object is a **GAlignmentPairs** object that contains information on our paired reads ``` r sortedBAM <- "data/SOX9CNR_W6_rep1_chr18_sorted.bam" cnrReads <- readGAlignmentPairs(sortedBAM, param = myParam) cnrReads[1:2, ] ``` ``` ## GAlignmentPairs object with 2 pairs, strandMode=1, and 0 metadata columns: ## seqnames strand : ranges -- ranges ## <Rle> <Rle> : <IRanges> -- <IRanges> ## [1] chr18 + : 3001178-3001217 -- 3001186-3001225 ## [2] chr18 + : 3002517-3002556 -- 3002544-3002583 ## ------- ## seqinfo: 22 sequences from an unspecified genome ``` --- ## GAlignmentPairs - CUT&RUN We access the **GAlignments** objects using the **first()** and **second()** accessor functions to gain information on the first or second read respectively. ``` r read1 <- GenomicAlignments::first(cnrReads) read2 <- GenomicAlignments::second(cnrReads) read2[1:2, ] ``` ``` ## GAlignments object with 2 alignments and 4 metadata columns: ## seqnames strand cigar qwidth start end width njunc | ## <Rle> <Rle> <character> <integer> <integer> <integer> <integer> <integer> | ## [1] chr18 - 40M 40 3001186 3001225 40 0 | ## [2] chr18 - 40M 40 3002544 3002583 40 0 | ## qname mapq isize flag ## <character> <integer> <integer> <integer> ## [1] SRR20110409.2378427 0 -48 147 ## [2] SRR20110409.10295909 10 -67 147 ## ------- ## seqinfo: 22 sequences from an unspecified genome ``` --- ## Retrieve MapQ scores - CUT&RUN One of the first we can do is obtain the distribution of MapQ scores for our read1 and read2. We can access this for each read using **mcols()** function to access the mapq slot of the GAlignments object for each read. For Rsubread, a MAPQ of 0 means that a read maps equally well to multiple locations, we want to assess if this is common and potentially remove these reads. ``` r read1MapQ <- mcols(read1)$mapq read2MapQ <- mcols(read2)$mapq read1MapQ[1:5] ``` ``` ## [1] 0 40 40 10 10 ``` --- ## MapQ score frequencies - CUT&RUN We can then use the **table()** function to summarize the frequency of scores for each read in pair. ``` r read1MapQFreqs <- table(read1MapQ) read2MapQFreqs <- table(read2MapQ) read1MapQFreqs ``` ``` ## read1MapQ ## 0 6 8 10 13 20 40 ## 3123 32 307 1848 9611 31077 357317 ``` ``` r read2MapQFreqs ``` ``` ## read2MapQ ## 0 6 8 10 13 20 40 ## 3123 50 396 2232 11486 31529 354499 ``` --- ## Plot MapQ scores - CUT&RUN Finally we can plot the distributions of MapQ for each read in pair using ggplot2. ``` r library(ggplot2) toPlot <- data.frame(MapQ = c(names(read1MapQFreqs), names(read2MapQFreqs)), Frequency = c(read1MapQFreqs, read2MapQFreqs), Read = c(rep("Read1", length(read1MapQFreqs)), rep("Read2", length(read2MapQFreqs)))) toPlot$MapQ <- factor(toPlot$MapQ, levels = unique(sort(as.numeric(toPlot$MapQ)))) ggplot(toPlot, aes(x = MapQ, y = Frequency, fill = MapQ)) + geom_bar(stat = "identity") + facet_grid(~Read) ``` <!-- --> --- ## Retrieving insert sizes - CUT&RUN Now we have reads in the paired aligned data into R, we can retrieve the insert sizes from the **mcols()** attached to **GAlignments** objects of each read pair. CUT&RUN should represent a mix of fragment lengths depending on the target. A TF will generally only be in nucleosome-free regions and will have small fragments, while a histone mark might have longer fragments. Since properly paired reads will have the same insert size length we extract insert sizes from read1. ``` r insertSizes <- abs(mcols(read1)$isize) head(insertSizes) ``` ``` ## [1] 48 67 67 67 67 67 ``` --- ## Plotting insert sizes - CUT&RUN We can use the **table()** function to retrieve a vector of the occurrence of each fragment length. ``` r fragLenSizes <- table(insertSizes) fragLenSizes[1:5] ``` ``` ## insertSizes ## 30 31 32 33 34 ## 1 574 746 1067 1386 ``` --- ## Plotting insert sizes - CUT&RUN Now we can use the newly acquired insert lengths for chromosome 18 to plot the distribution of all fragment lengths. ``` r library(ggplot2) toPlot <- data.frame(InsertSize = as.numeric(names(fragLenSizes)), Count = as.numeric(fragLenSizes)) fragLenPlot <- ggplot(toPlot, aes(x = InsertSize, y = Count)) + geom_line() fragLenPlot + theme_bw() ``` <!-- --> --- ## Plotting insert sizes - CUT&RUN We can now annotate our nucleosome free (< 120bp) regions and see a clear bi-modal distribution of reads separated by this distinction. ``` r fragLenPlot + geom_vline(xintercept = c(120), colour = "darkgreen") + theme_bw() ``` <!-- --> --- ## Filter reads - CUT&RUN We will filter out fragments where either read has a MAPQ of 0 and reads shorter than 1000bp. MAPQ of zero means a read mapped equally well to at least two places in the genome. Note: For TF CUT&RUN where we expect binding in nucleosome free regions, sometimes it is common to only take reads shorter than 120bp. In the exercises you have a chance to compare both strategies. ``` r cnrReads_filter <- cnrReads[insertSizes < 1000 & (!mcols(read1)$mapq == 0 | !mcols(read2)$mapq == 0)] ``` --- ## Remove blacklist - CUT&RUN ChIPseq and CUT&RUN will often show the presence of common artifacts, such as ultra-high signal regions. Such regions can confound peak calling, fragment length estimation and QC metrics. Anshul Kundaje created the DAC blacklist as a reference to help deal with these regions. We will use this reference to remove these reads from the BAM file. <div align="center"> <img src="imgs/blacklist.png" alt="offset" height="400" width="350"> </div> --- ## Remove blacklist regions - CUT&RUN You can find a Blacklist for most genomes at the [Boyle lab Github page](https://github.com/Boyle-Lab/Blacklist/tree/master/lists). The most recent version (V2) for mm10 is available in the *data* folder of this course. We read it into R as a GRanges object using the `import` function from rtracklayer. ``` r library(rtracklayer) blacklist <- "data/mm10-blacklist.v2.bed" bl_regions <- rtracklayer::import(blacklist) bl_regions ``` ``` ## GRanges object with 3435 ranges and 1 metadata column: ## seqnames ranges strand | name ## <Rle> <IRanges> <Rle> | <character> ## [1] chr10 1-3135400 * | High Signal Region ## [2] chr10 3218901-3276600 * | Low Mappability ## [3] chr10 3576901-3627700 * | Low Mappability ## [4] chr10 4191101-4197600 * | Low Mappability ## [5] chr10 4613501-4615400 * | High Signal Region ## ... ... ... ... . ... ## [3431] chrY 6530201-6663200 * | High Signal Region ## [3432] chrY 6760201-6835800 * | High Signal Region ## [3433] chrY 6984101-8985400 * | High Signal Region ## [3434] chrY 10638501-41003800 * | High Signal Region ## [3435] chrY 41159201-91744600 * | High Signal Region ## ------- ## seqinfo: 21 sequences from an unspecified genome; no seqlengths ``` --- ## Remove blacklist regions - CUT&RUN The `granges` function finds the range spanning the whole fragment (5' read 1 to 3' read 2) when given a GAlignmentPairs object. We then identify the fragments from this GRanges object that overlap the blacklist regions. ``` r fragment_spans <- granges(cnrReads_filter) bl_remove <- overlapsAny(fragment_spans, bl_regions) table(bl_remove) ``` ``` ## bl_remove ## FALSE TRUE ## 394289 5893 ``` --- ## Write out filtered BAM file - CUT&RUN This logical vector is then used to filter out these fragments that overlap blacklist. It is helpful to include the names for each read in the BAM file for the peak callers, so we unlist the GAlignmentPairs object so each read now has its own row, and then the names are set from the metadata of the object. Lastly, the BAM file is written to file. ``` r cnrReads_filter_noBL <- cnrReads_filter[!bl_remove] cnrReads_unlist <- unlist(cnrReads_filter_noBL) names(cnrReads_unlist) <- mcols(cnrReads_unlist)$qname filter_bam <- gsub("sorted.bam", "filter.bam", basename(sortedBAM)) rtracklayer::export(cnrReads_unlist, filter_bam, format = "bam") ``` --- ## Prepare BAM for peak calling - CUT&RUN The `samtools fixmate` function is helpful to clean up paired end BAM files. It will repair some of the metadata in the BAM file, which can be especially helpful when doing filtering and reading/writing of paired-end data in R. Below we use Herper to call samtools as the fixmate function is not available with Rsamtools. You'll notice a few extra tricks in the `with_CondaEnv` function call. We can use brackets {} to run multiple lines of code, and we also utilize the `tempfile` function to create a temporary path. These files will be deleted with the R session ends. ``` r forPeak_bam <- gsub("_filter.bam", "_forPeak.bam", filter_bam) Herper::with_CondaEnv("CnR_analysis", pathToMiniConda = "miniconda", { tempBam <- paste0(tempfile(), ".bam") system(paste("samtools sort", "-n", "-o", tempBam, filter_bam, sep = " ")) system(paste("samtools fixmate", "-m", tempBam, forPeak_bam, sep = " ")) }) ``` --- ## Prepare BAM for peak calling - CUT&RUN The peak caller MACS requires the file to be sorted by coordinate. This can be done in R with the `sortBam` function. Note: This could also be done using `samtools sort` on the command line using Herper, as shown in the previous slide when we sorted by name before using `samtools fixmate`. ``` r forMacs_bam <- gsub("_forPeak.bam", "_macs.bam", forPeak_bam) sortBam(forPeak_bam, gsub(".bam", "", forMacs_bam)) indexBam(forMacs_bam) ``` --- class: inverse, center, middle # Peak calling - CUT&RUN <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- ## CUT&RUN peak calling with MACS MACS3 is installed and ready to use in our conda environment. The `macs3 callpeak` function is used to call peaks. To run MACS to we simply need to supply: * A BAM file to find enriched regions in. (specified after **-t**) * File format (BAMPE for paired end, specified after **-f**) * A Name for peak calls (specified after **–name**). * An output folder to write peaks into (specified after **–outdir**). * Optionally, and sometimes recommended, we can identify a control file (we don't have one, but would be specified after **–c**). Example command line function call: ``` macs3 callpeak -t pairedEnd.bam -f BAMPE --outdir path/to/output/ --n pairedEndPeakName ``` --- ## CUT&RUN peak calling with MACS If we have sequenced paired-end data then we do know the fragment lengths and can provide BAM files to MACS which have been prefiltered to properly paired We have to simply tell MACS that the data is paired using the format argument. This is important as MACS will guess it is single-end BAM by default. ``` r peaks_name <- gsub("_macs.bam", "", basename(forMacs_bam)) with_CondaEnv("CnR_analysis", pathToMiniConda = "miniconda", system2(command = "macs3", args = c("callpeak", "-t", forMacs_bam, "-f", "BAMPE", "--outdir", ".", "-n", peaks_name))) ``` --- ## MACS output files Following MACS peak calling we would get 3 files. * {name}.narrowPeak -- Narrow peak format suitable for IGV and further analysis * {name}.peaks.xls -- Peak table suitable for review in excel.(not actually a xls but a tsv) * {name}.summits.bed -- Summit positions for peaks useful for finding motifs and plotting <img src="imgs/macsIGV.png" alt="igv" height="450" width="800"> --- ## CUT&RUN peak calling with SEACR [SEACR](https://epigeneticsandchromatin.biomedcentral.com/articles/10.1186/s13072-019-0287-4) is a peak calling algorithm that was written by same lab that developed CUT&RUN (Henikoff). It is meant to deal with the lower read depths and sparser background signal from CUT&RUN. SEACR requires a bedgraph file, which like a BAM file contains information about genomic CUT&RUN signal. In the following slides we will make a paired end bed file, filter it and then write out as a bedgraph. SEACR is built for paired end data, and first we make a bedpe file using the function `bamtobed` from bedtools. We can use our conda environment we made previously to do this. ``` r # use the BAM with blacklist reads removed (from MACS section) bedpe <- gsub("\\.bam", "\\.bed", forPeak_bam) with_CondaEnv("CnR_analysis", pathToMiniConda = "miniconda", system(paste("bedtools bamtobed -bedpe -i", forPeak_bam, ">", bedpe, sep = " "))) ``` --- ## CUT&RUN peak calling with SEACR Lastly we make a bedgraph file with the `genomecov` function from bedtools. A key input to this function is a file with chromosome lengths, and we can extract this from the FASTA file. NOTE: The **chrom.lengths.txt** is already available in the 'data' folder of the course. ``` r library(dplyr) library(tibble) indexFa("BSgenome.Mmusculus.UCSC.mm10.mainChrs.fa") seqlengths(Rsamtools::FaFile("BSgenome.Mmusculus.UCSC.mm10.mainChrs.fa")) %>% as.data.frame() %>% rownames_to_column() %>% write.table(file = "chrom.lengths.txt", row.names = FALSE, col.names = FALSE, quote = FALSE, sep = "\t") ``` --- ## CUT&RUN peak calling with SEACR Then we make the bedgraph file with `genomecov` using the file containing chromosome lengths in the '-g' argument and the bedpe file in the '-i' argument. The result is written to a file that is our bedgraph. ``` r bedgraph <- gsub("\\.bed", "\\.bedgraph", bedpe) with_CondaEnv("CnR_analysis", pathToMiniConda = "miniconda", system(paste("bedtools genomecov -bg -i", bedpe, "-g", "data/chrom.lengths.txt", ">", bedgraph, sep = " "))) ``` --- ## CUT&RUN peak calling with SEACR Now we have the file required to run SEACR, but we need the SEACR executable script to call peaks. We can download this from the Github page for SEACR. After it's downloaded you might see the following error when running the script, indicating the permissions need to be changed. ``` r download.file("https://github.com/FredHutch/SEACR/archive/refs/tags/v1.4-beta.2.zip", destfile = "~/Downloads/SEACR_v1.4.zip") unzip("~/Downloads/SEACR_v1.4.zip", exdir = "~/Downloads/SEACR_v1.4") seacr_path <- "~/Downloads/SEACR_v1.4/SEACR-1.4-beta.2/SEACR_1.4.sh" system(paste(seacr_path, "-h")) ``` ``` trying URL 'https://github.com/FredHutch/SEACR/archive/refs/tags/v1.4-beta.2.zip' downloaded 3.0 MB sh: /Users/douglasbarrows/Downloads/SEACR_v1.4/SEACR-1.4-beta.2/SEACR_1.4.sh: Permission denied ``` --- ## CUT&RUN peak calling with SEACR The permissions can be changed by running the `chmod` function. Here we make it accessible to anyone, but this can be fine-tuned depending on your system. ``` r system(paste("chmod 777", seacr_path)) system(paste(seacr_path, "-h")) ``` ``` SEACR: Sparse Enrichment Analysis for CUT&RUN Usage: bash SEACR_1.4.sh -b <experimental bedgraph>.bg -c [<control bedgraph>.bg | <FDR threshold>] -n [norm | non] -m [relaxed | stringent] -o output_prefix -e [double] -r [yes | no] Required input fields: -b|--bedgraph Experimental bedgraph file -c|--control Control bedgraph file -n|--normalize Internal normalization (norm|non) -m|--mode Stringency mode (stringent|relaxed) -o|--output Output prefix Optional input fields: -e|--extension Peak extension constant for peak merging (Default=0.1) -r|--remove Remove peaks overlapping IgG peaks (yes|no, Default=yes) -h|--help Print help screen Output file: <output prefix>.[stringent | relaxed].bed (Bed file of enriched regions) Output data structure: <chr> <start> <end> <AUC> <max signal> <max signal region> Description of output fields: Field 1: Chromosome Field 2: Start coordinate Field 3: End coordinate Field 4: Total signal contained within denoted coordinates Field 5: Maximum bedgraph signal attained at any base pair within denoted coordinates Field 6: Region representing the farthest upstream and farthest downstream bases within the denoted coordinates that are represented by the maximum bedgraph signal Examples: bash SEACR_1.4.sh -b target.bedgraph -c IgG.bedgraph -n norm -m stringent -o output Calls enriched regions in target data using normalized IgG control track with stringent threshold and default 10% peak extension for merging bash SEACR_1.4.sh -b target.bedgraph -c IgG.bedgraph -n non -m relaxed -o output -e 0.5 Calls enriched regions in target data using non-normalized IgG control track with relaxed threshold and 50% peak extension for merging bash SEACR_1.4.sh -b target.bedgraph -c 0.01 -n non -m stringent -o output Calls enriched regions in target data by selecting the top 1% of regions by total signal ``` --- ## CUT&RUN peak calling with SEACR Now we can call peaks with the bedgraph file we generated and the SEACR.sh script. Here we set a few key arguments for peaks calling: * -b: the path to the bedgraph file * -c: either a path to a control file (eg IgG) or a number between 0 and 1. If its a number, then this fraction will control the top peaks returned based on the signal in the peak + below we will only return the top 1% of peaks * -n: 'norm' if using control, 'non' if not * -m: 'relaxed' or 'stringent' mode ``` r seacr_prefix <- gsub("\\.bedgraph", "_top01", bedgraph) system(paste(seacr_path, "-b", bedgraph, "-c 0.01", "-n non", "-m stringent", "-o", seacr_prefix)) ``` --- ## CUT&RUN peak calling with SEACR Following peak calling we get one file. * {prefix}.stringent.bed -- a bed file with columns showing signal information that is suitable for IGV and further analysis We can look at this peak file in IGV and compare to the MACS peak calls: <img src="imgs/macs_and_seacr_igv.png" alt="igv" height="400" width="800"> --- class: inverse, center, middle # Post alignment processing and peak calling - ATACseq <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- class: inverse, center, middle # Quality control - CUT&RUN <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- ## Quality Control CUT&RUN has many sources of potential noise including * Varying efficiency of antibodies * Non-specific binding * Library complexity * artifacts and background Many of these sources of noise can be assessed using some well established methodology, many borrowed from ChIPseq.. --- ## Mapped reads First, we can retrieve and plot the number of mapped reads using [the **idxstatsBam()** function.](https://rockefelleruniversity.github.io/Bioconductor_Introduction//presentations/slides/AlignedDataInBioconductor.html#16) ``` r mappedReads <- idxstatsBam("~/Downloads/SOX9CNR_W6_rep1_sorted.bam") mappedReads <- mappedReads[mappedReads$seqnames %in% paste0("chr", c(1:19, "X", "Y", "M")), ] TotalMapped <- sum(mappedReads[, "mapped"]) ggplot(mappedReads, aes(x = seqnames, y = mapped)) + geom_bar(stat = "identity") + coord_flip() ``` <!-- --> --- ## Quality metrics for CUT&RUN The [**ChIPQC package**](https://bioconductor.org/packages/release/bioc/html/ChIPQC.html) wraps some of the metrics into a Bioconductor package and takes care to measure these metrics under the appropriate condition. To run a single sample we can use the **ChIPQCsample()** function, the relevant **unfiltered** BAM file and we are recommended to supply a **blacklist** as a BED file or GRanges and Genome name. We can use the same blacklist bed file that we used for peak calling. This file is available in the *data* folder of this course and also at the [Boyle lab Github page](https://github.com/Boyle-Lab/Blacklist/tree/master/lists). ``` r QCresult <- ChIPQCsample(reads = "/pathTo/myCnRreads.bam", genome = "mm10", peaks = "/pathTo/myCnRpeaks.bed", blacklist = "/pathTo/mm10_Blacklist.bed") ``` --- ## Quality control with ChIPQC We can then provide an initial analysis of our CUT&RUN samples quality using the **ChIPQCsample()** function from the [**ChIPQC** package.](http://bioconductor.org/packages/stats/bioc/ChIPQC/) Here we evaluate the quality of samples we aligned in the prior session with Rsubread. The returned object is a **ChIPQCsample** object. Note: We use the BAM file before we filtered out low quality reads and blacklist so we can incorporate those reads into our QC analysis. ``` r library(ChIPQC) blklist <- rtracklayer::import.bed("data/mm10-blacklist.v2.bed") qc_sox9_rep1 <- ChIPQCsample("data/SOX9CNR_W6_rep1_chr18_sorted.bam", annotation = "mm10", peaks = "data/SOX9CNR_W6_rep1_chr18_peaks.narrowPeak", blacklist = blklist, chromosomes = "chr18") ``` ``` ## 1 / 300 2 / 300 3 / 300 4 / 300 5 / 300 6 / 300 7 / 300 8 / 300 9 / 300 10 / 300 11 / 300 12 / 300 13 / 300 14 / 300 15 / 300 16 / 300 17 / 300 18 / 300 19 / 300 20 / 300 21 / 300 22 / 300 23 / 300 24 / 300 25 / 300 26 / 300 27 / 300 28 / 300 29 / 300 30 / 300 31 / 300 32 / 300 33 / 300 34 / 300 35 / 300 36 / 300 37 / 300 38 / 300 39 / 300 40 / 300 41 / 300 42 / 300 43 / 300 44 / 300 45 / 300 46 / 300 47 / 300 48 / 300 49 / 300 50 / 300 51 / 300 52 / 300 53 / 300 54 / 300 55 / 300 56 / 300 57 / 300 58 / 300 59 / 300 60 / 300 61 / 300 62 / 300 63 / 300 64 / 300 65 / 300 66 / 300 67 / 300 68 / 300 69 / 300 70 / 300 71 / 300 72 / 300 73 / 300 74 / 300 75 / 300 76 / 300 77 / 300 78 / 300 79 / 300 80 / 300 81 / 300 82 / 300 83 / 300 84 / 300 85 / 300 86 / 300 87 / 300 88 / 300 89 / 300 90 / 300 91 / 300 92 / 300 93 / 300 94 / 300 95 / 300 96 / 300 97 / 300 98 / 300 99 / 300 100 / 300 101 / 300 102 / 300 103 / 300 104 / 300 105 / 300 106 / 300 107 / 300 108 / 300 109 / 300 110 / 300 111 / 300 112 / 300 113 / 300 114 / 300 115 / 300 116 / 300 117 / 300 118 / 300 119 / 300 120 / 300 121 / 300 122 / 300 123 / 300 124 / 300 125 / 300 126 / 300 127 / 300 128 / 300 129 / 300 130 / 300 131 / 300 132 / 300 133 / 300 134 / 300 135 / 300 136 / 300 137 / 300 138 / 300 139 / 300 140 / 300 141 / 300 142 / 300 143 / 300 144 / 300 145 / 300 146 / 300 147 / 300 148 / 300 149 / 300 150 / 300 151 / 300 152 / 300 153 / 300 154 / 300 155 / 300 156 / 300 157 / 300 158 / 300 159 / 300 160 / 300 161 / 300 162 / 300 163 / 300 164 / 300 165 / 300 166 / 300 167 / 300 168 / 300 169 / 300 170 / 300 171 / 300 172 / 300 173 / 300 174 / 300 175 / 300 176 / 300 177 / 300 178 / 300 179 / 300 180 / 300 181 / 300 182 / 300 183 / 300 184 / 300 185 / 300 186 / 300 187 / 300 188 / 300 189 / 300 190 / 300 191 / 300 192 / 300 193 / 300 194 / 300 195 / 300 196 / 300 197 / 300 198 / 300 199 / 300 200 / 300 201 / 300 202 / 300 203 / 300 204 / 300 205 / 300 206 / 300 207 / 300 208 / 300 209 / 300 210 / 300 211 / 300 212 / 300 213 / 300 214 / 300 215 / 300 216 / 300 217 / 300 218 / 300 219 / 300 220 / 300 221 / 300 222 / 300 223 / 300 224 / 300 225 / 300 226 / 300 227 / 300 228 / 300 229 / 300 230 / 300 231 / 300 232 / 300 233 / 300 234 / 300 235 / 300 236 / 300 237 / 300 238 / 300 239 / 300 240 / 300 241 / 300 242 / 300 243 / 300 244 / 300 245 / 300 246 / 300 247 / 300 248 / 300 249 / 300 250 / 300 251 / 300 252 / 300 253 / 300 254 / 300 255 / 300 256 / 300 257 / 300 258 / 300 259 / 300 260 / 300 261 / 300 262 / 300 263 / 300 264 / 300 265 / 300 266 / 300 267 / 300 268 / 300 269 / 300 270 / 300 271 / 300 272 / 300 273 / 300 274 / 300 275 / 300 276 / 300 277 / 300 278 / 300 279 / 300 280 / 300 281 / 300 282 / 300 283 / 300 284 / 300 285 / 300 286 / 300 287 / 300 288 / 300 289 / 300 290 / 300 291 / 300 292 / 300 293 / 300 294 / 300 295 / 300 296 / 300 297 / 300 298 / 300 299 / 300 300 / 300 ## done ## Calculating coverage ## [1] 1 ``` ``` r class(qc_sox9_rep1) ``` ``` ## [1] "ChIPQCsample" ## attr(,"package") ## [1] "ChIPQC" ``` --- ## Quality control with ChIPQC We can display our **ChIPQCsample** object which will show a basic summary of our CUT&RUN quality. ``` r qc_sox9_rep1 ``` ``` ## ChIPQCsample ``` ``` ## Number of Mapped reads: 829781 ``` ``` ## Number of Mapped reads passing MapQ filter: 788532 ``` ``` ## Percentage Of Reads as Non-Duplicates (NRF): 100(0) ``` ``` ## Percentage Of Reads in Blacklisted Regions: 2 ``` ``` ## SSD: 2.61011887557391 ``` ``` ## Fragment Length Cross-Coverage: 0.0133142837287353 ``` ``` ## Relative Cross-Coverage: 1.06351020408163 ``` ``` ## Percentage Of Reads in GenomicFeature: ``` ``` ## ProportionOfCounts ## Peaks 0.00000000 ## BlackList 0.02050392 ## LongPromoter20000to2000 0.27655060 ## Promoters2000to500 0.03991975 ## Promoters500 0.03665038 ## All5utrs 0.01701516 ## Alltranscripts 0.57769628 ## Allcds 0.01479838 ## Allintrons 0.54665125 ## All3utrs 0.01184733 ``` ``` ## Percentage Of Reads in Peaks: 0 ``` ``` ## Number of Peaks: 0 ``` ``` ## GRanges object with 0 ranges and 2 metadata columns: ## seqnames ranges strand | Counts bedRangesSummitsTemp ## <Rle> <IRanges> <Rle> | <integer> <numeric> ## ------- ## seqinfo: 1 sequence from an unspecified genome; no seqlengths ``` --- ## QC of multiple samples It is helpful to review CUT&RUN quality versus other samples (including any IgG) which we are using (or even external data if you do not have your own). This will allow us to identify expected patterns of CUT&RUN enrichment in our samples versus controls as well as spot any outlier samples by these metrics. We can run **ChIPQCsample()** on all our samples of interest using an **lapply**. First we make vectors of BAM files and peak files where the indeces of BAMs and corresponding peaks line up. If you want to try on your own, the BAM files are [available on dropbox](https://www.dropbox.com/scl/fo/f8q9iz5j1mic0wrhzei2c/AEIL42gWMI-Tc_OwFxc5wOA?rlkey=gxl9u7rqk783zuz4tezj9e3pu&st=qzh2allk&dl=0), but be aware that they are big files. ``` r bamsToQC <- c("~/Downloads/SOX9CNR_D0_rep1_sorted.bam", "~/Downloads/SOX9CNR_D0_rep2_sorted.bam", "~/Downloads/SOX9CNR_W6_rep1_sorted.bam", "~/Downloads/SOX9CNR_W6_rep2_sorted.bam") peaksToQC <- c("data/peaks/SOX9CNR_D0_rep1_macs_peaks.narrowPeak", "data/peaks/SOX9CNR_D0_rep2_macs_peaks.narrowPeak", "data/peaks/SOX9CNR_W6_rep1_macs_peaks.narrowPeak", "data/peaks/SOX9CNR_W6_rep2_macs_peaks.narrowPeak") ``` --- ## QC of multiple samples Then we can run the `ChIPQCsample` function using *lapply* while cycling through the paths to BAM and peak files. Here we limit the analyis to chromosome 18. ``` r myQC <- lapply(seq_along(bamsToQC), function(x) { ChIPQCsample(bamsToQC[x], annotation = "mm10", peaks = peaksToQC[x], blacklist = blklist, chromosomes = "chr18") }) names(myQC) <- basename(bamsToQC) ``` --- ## QC of multiple samples All ChIPQC functions can work with a named list of **ChIPQCsample** objects to aggregate scores into table as well as plots. Here we use the **QCmetrics()** function to give an overview of quality metrics. ``` r QCmetrics(myQC) ``` ``` ## Reads Map% Filt% Dup% ReadL FragL RelCC SSD RiP% RiBL% ## SOX9CNR_D0_rep1_sorted.bam 3858873 100 7.49 0 36 79 2.000 3.23 5.59 2.70 ## SOX9CNR_D0_rep2_sorted.bam 2310101 100 9.05 0 34 81 3.290 3.50 1.22 2.72 ## SOX9CNR_W6_rep1_sorted.bam 835558 100 5.05 0 36 73 0.975 2.66 32.80 1.91 ## SOX9CNR_W6_rep2_sorted.bam 1600013 100 5.49 0 35 71 0.348 5.60 25.90 1.97 ``` --- class: inverse, center, middle # Blacklists and SSD <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- ## Blacklists As we discussed while calling peaks, CUT&RUN will often show the presence of common artifacts, such as ultra-high signal regions. Such regions can confound peak calling, fragment length estimation and QC metrics. Anshul Kundaje created the DAC blacklist as a reference to help deal with these regions. <div align="center"> <img src="imgs/blacklist.png" alt="offset" height="400" width="400"> </div> --- ## Blacklists and SSD SSD is one of these measures that is sensitive to blacklisted artifacts. SSD is a measure of standard deviation of signal across the genome with higher scores reflecting significant pile-up of reads. SSD can therefore be used to assess both the extent of ultra high signals and the signal. But first blacklisted regions must be removed. <div align="center"> <img src="imgs/ssdAndBlacklist.png" alt="offset" height="400" width="300"> </div> --- ## Standardized Standard Deviation ChIPQC calculates SSD before and after removing signal coming from Blacklisted regions. The **plotSSD()** function plots samples's pre-blacklisting score in **red** and post-blacklisting score in **blue**. Blacklisting does not have a huge impact on SSD in our samples, suggesting a strong peak signal. ``` r plotSSD(myQC) + xlim(0, 7) ``` <!-- --> --- ## Standardized Standard Deviation This is not always the case. Sometimes you will much higher pre-blacklist SSD than post-blacklist SSD. This would indicate a strong background signal in blacklisted regions for that sample. Below is an example of this from another dataset: <img src="imgs/blacklist_ssd.png"height="200" width="600"> --- class: inverse, center, middle # Library complexity and enrichment <html><div style='float:left'></div><hr color='#EB811B' size=1px width=720px></html> --- ## Library complexity A potential source of noise in CUT&RUN is overamplification of the CUT&RUN library during a PCR step. This can lead to large number of duplicate reads which may confound peak calling.  --- ## Duplication We should compare our duplication rate across samples to identify any sample experiencing overamplification and so potential of a lower complexity. The **flagtagcounts()** function reports can report the number of duplicates and total mapped reads and so from there we can calculate our duplication rate. ``` r myFlags <- flagtagcounts(myQC) myFlags["DuplicateByChIPQC", ]/myFlags["Mapped", ] ``` ``` ## SOX9CNR_D0_rep1_sorted.bam SOX9CNR_D0_rep2_sorted.bam SOX9CNR_W6_rep1_sorted.bam ## 0.18044154 0.25100764 0.22392940 ## SOX9CNR_W6_rep2_sorted.bam ## 0.05412144 ``` --- ## Enrichment for reads across genes We can also use ChIPQC to review where our distribution of reads across gene features using the **plotRegi()** function. Here we expect CUT&RUN signal to be stronger in 5'UTRs and promoters when compared to input samples. ``` r p <- plotRegi(myQC) ``` --- ## Enrichment for reads across genes. ``` r p ``` <!-- -->